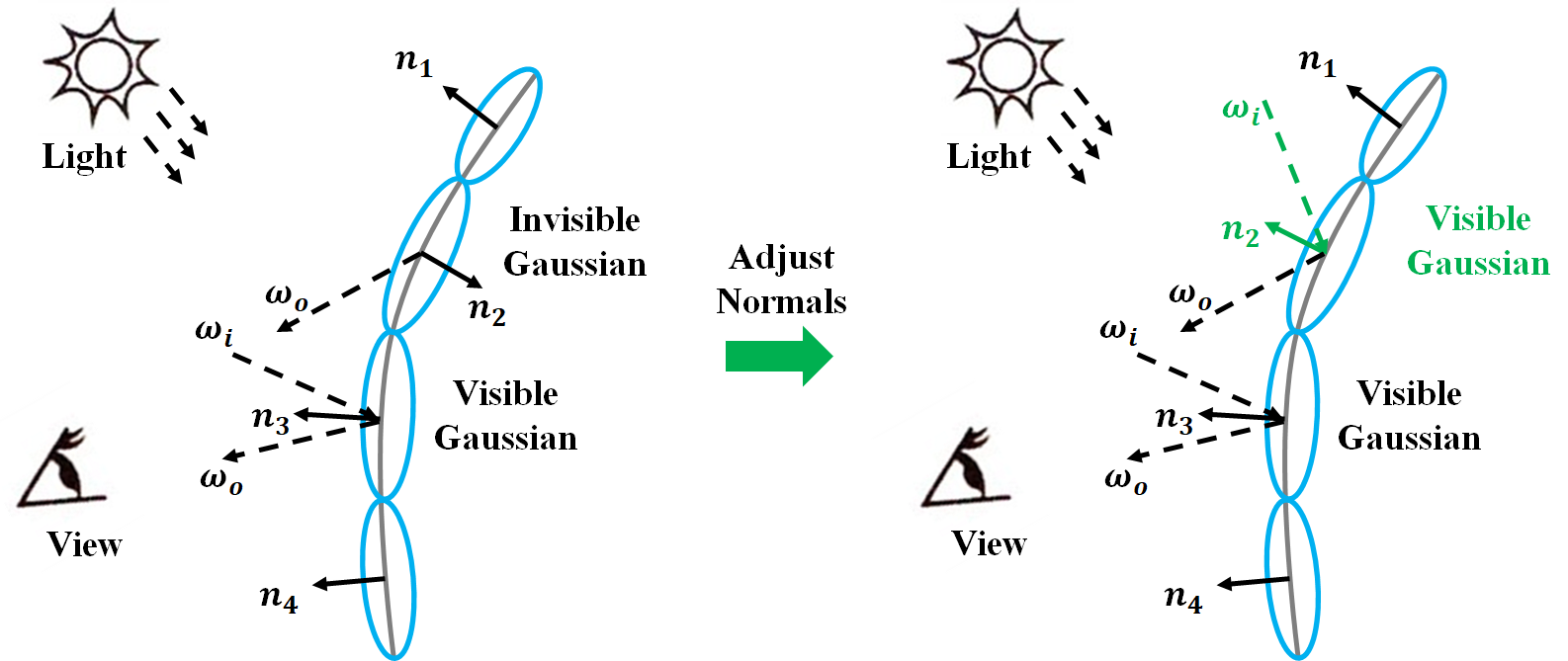

Normal Estimation

Gaussians that lie on the surface and are observed from the current viewpoint should be visible. A Gaussian is considered invisible when $\mathbf{n} \cdot \mathbf{v} \leq 0$, where $\mathbf{n}$ is its normal and $\mathbf{v}$ is the viewing direction from the Gaussian toward the camera, and such a Gaussian does not contribute to the color computation. The normals of these invisible Gaussians therefore need to be corrected so that the desired image can be reconstructed.
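The visibility test can be sketched as follows. This is a minimal illustration under stated assumptions, not the method's actual implementation. It assumes that each Gaussian has a center and a unit normal, and that $\mathbf{v}$ is the unit vector from the Gaussian's center toward the camera. The helper names (`view_direction`, `visibility_mask`, `flip_to_camera`) are hypothetical. Flipping a back-facing normal is shown only as one possible way to "modify" it, because the text does not specify the correction.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot product of two 3-vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def view_direction(center: Vec3, camera_pos: Vec3) -> Vec3:
    """Unit vector from a Gaussian's center toward the camera (assumed convention for v)."""
    d = (camera_pos[0] - center[0], camera_pos[1] - center[1], camera_pos[2] - center[2])
    length = dot(d, d) ** 0.5
    return (d[0] / length, d[1] / length, d[2] / length)


def visibility_mask(centers: Sequence[Vec3], normals: Sequence[Vec3],
                    camera_pos: Vec3) -> List[bool]:
    """True where n . v > 0. Gaussians with n . v <= 0 (including grazing ones)
    are treated as invisible and contribute no color."""
    return [dot(n, view_direction(c, camera_pos)) > 0.0
            for c, n in zip(centers, normals)]


def flip_to_camera(centers: Sequence[Vec3], normals: Sequence[Vec3],
                   camera_pos: Vec3) -> List[Vec3]:
    """Hypothetical correction: negate every normal with n . v <= 0 so it faces
    the camera. This is an illustrative assumption, not the source's stated method."""
    corrected: List[Vec3] = []
    for c, n in zip(centers, normals):
        if dot(n, view_direction(c, camera_pos)) <= 0.0:
            corrected.append((-n[0], -n[1], -n[2]))
        else:
            corrected.append((n[0], n[1], n[2]))
    return corrected


# Usage: camera on the +z axis, three Gaussians at the origin.
centers = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
normals = [(0.0, 0.0, 1.0), (0.0, 0.0, -1.0), (1.0, 0.0, 0.0)]
camera = (0.0, 0.0, 5.0)
mask = visibility_mask(centers, normals, camera)
flipped = flip_to_camera(centers, normals, camera)
```

The third normal is perpendicular to $\mathbf{v}$, so $\mathbf{n} \cdot \mathbf{v} = 0$. Following the $\leq 0$ rule in the text, it counts as invisible and is flipped along with the back-facing one.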